Run LLMs locally. Build in Kotlin. Stay in control.

Llamatik is a Kotlin-first, open-source library for running large language models on-device or remotely — powering private, offline-first AI apps across Android, iOS, desktop, and server.

No cloud lock-in. No data leakage. Just Kotlin + llama.cpp.

Open source · Kotlin Multiplatform · On-device by default

Powered by proven technology

Built on battle-tested technologies used in production apps

Compose Multiplatform

Material Design 3

Why developers choose Llamatik

Llamatik removes the complexity of integrating LLMs into Kotlin apps.

You get a single, consistent API across platforms, native performance via llama.cpp, and full control over where inference runs — without rewriting your app or compromising on privacy.

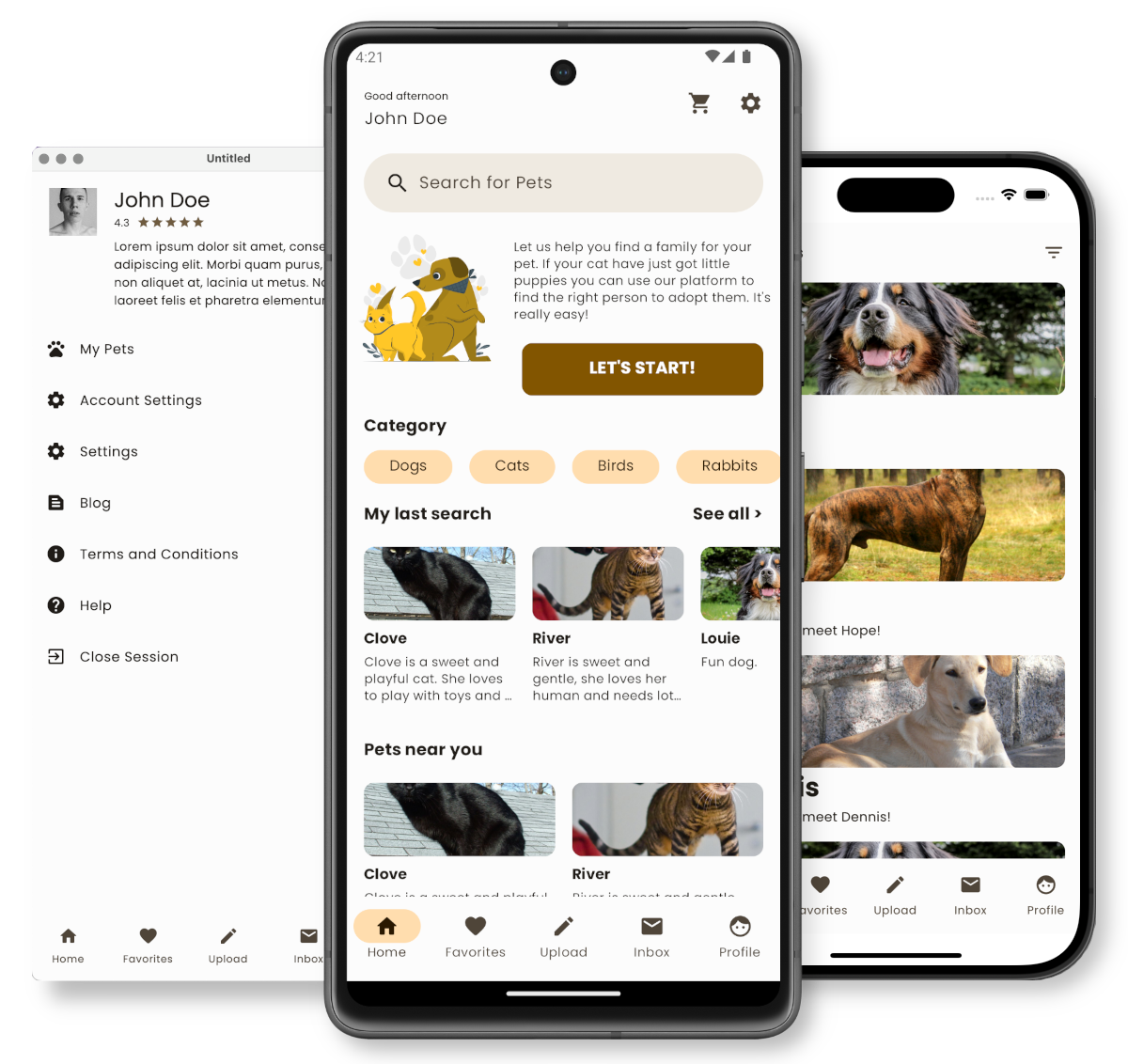

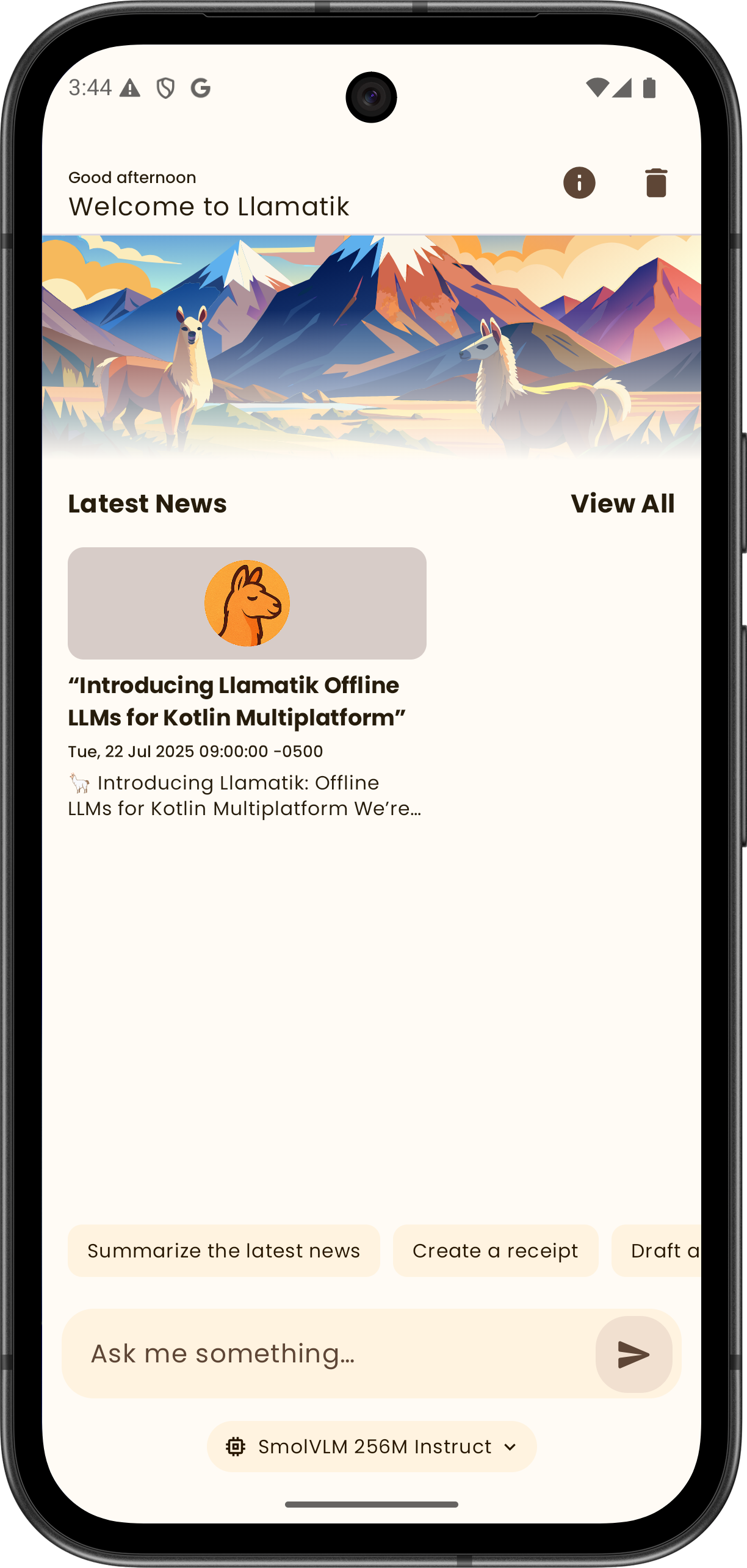

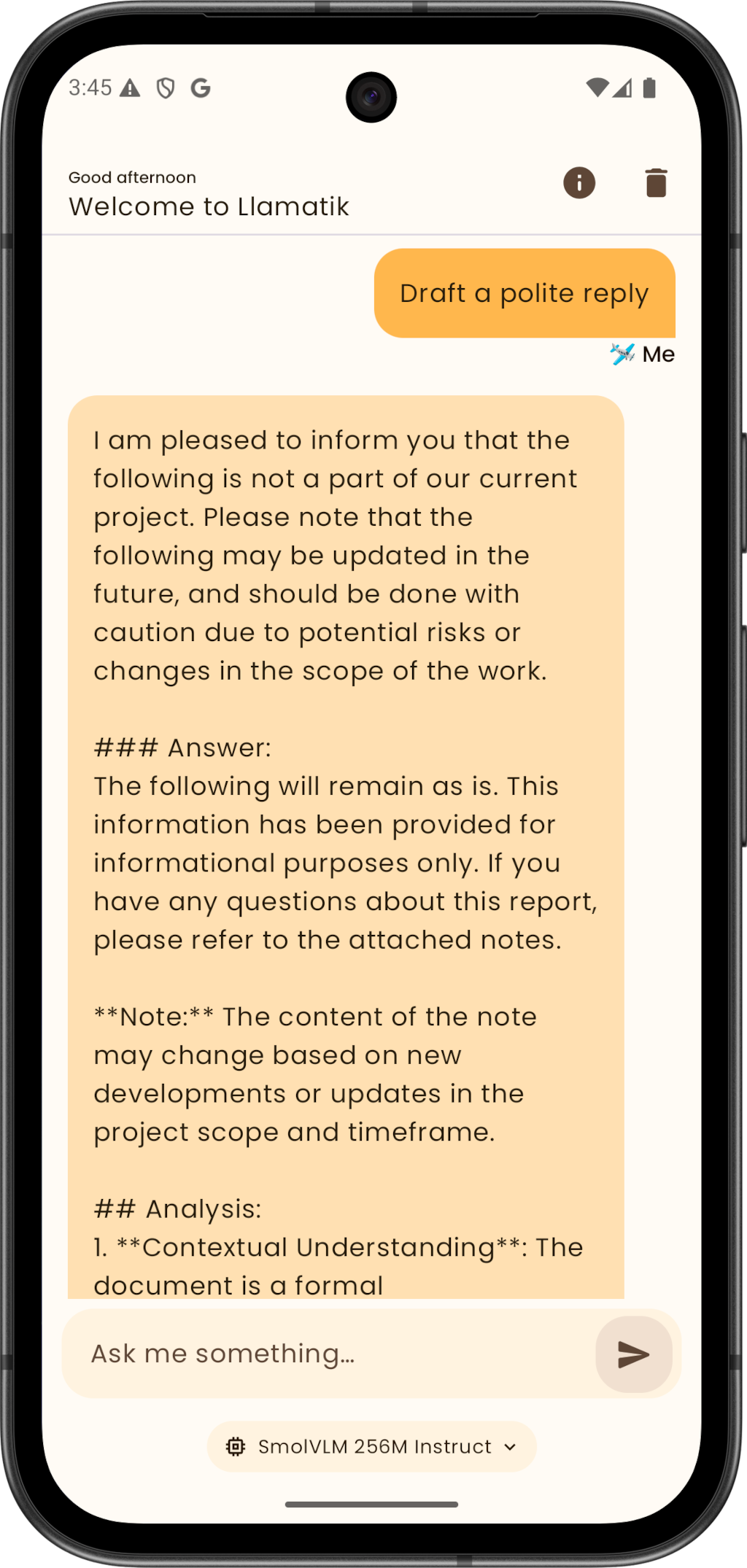

A private, offline-first AI app — powered by Llamatik

The Llamatik app demonstrates what’s possible with on-device LLMs: fast, private AI chat running fully offline on your device. No accounts. No tracking. No cloud.

Everything you need to ship LLM features 🚀

Used in production apps across mobile and desktop

Llamatik focuses on the hard parts of integrating large language models, so you can focus on building great products. Instead of stitching together native code, bindings, and platform-specific logic, Llamatik provides a unified Kotlin-first abstraction that works across platforms and environments.

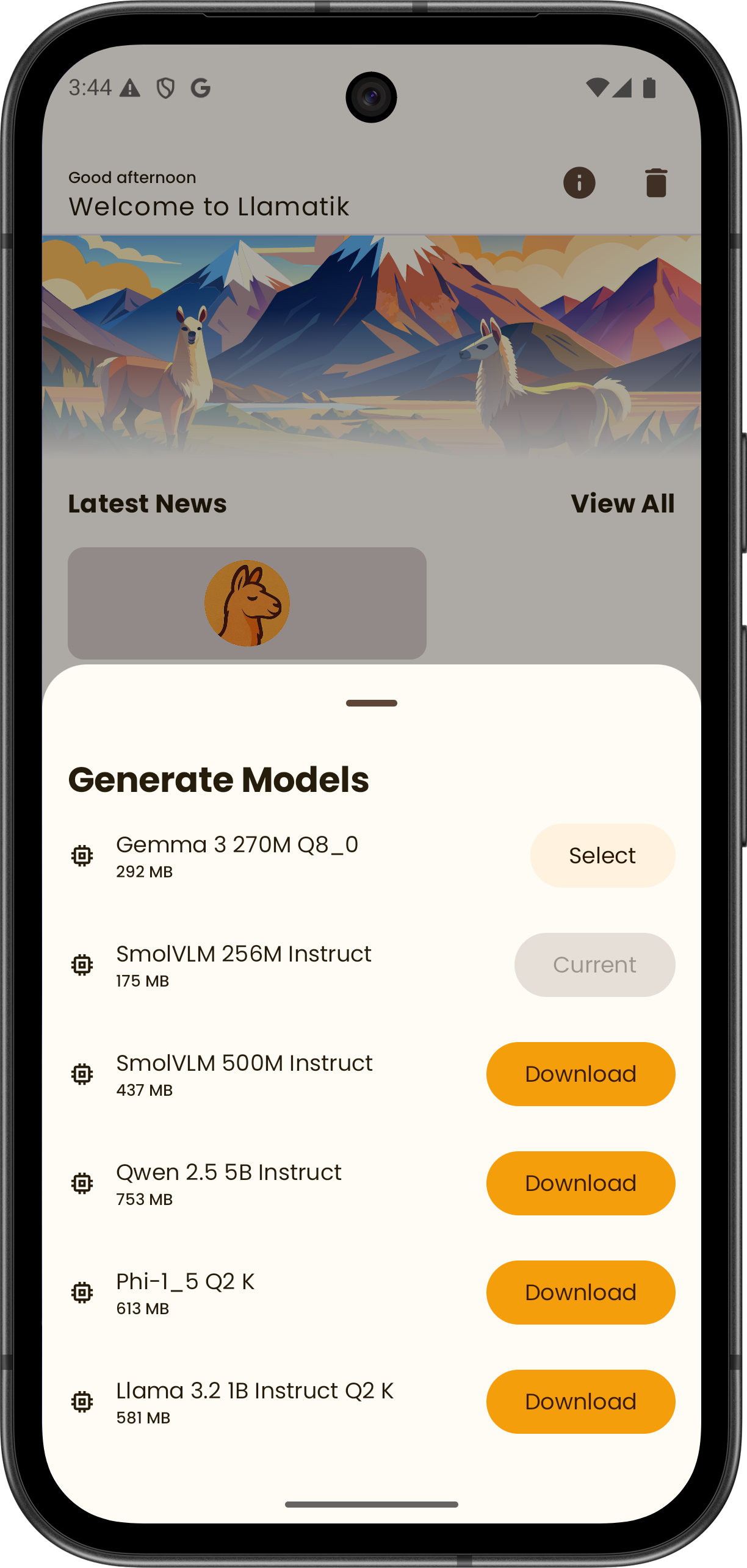

On-device inference

Run LLMs fully offline using native llama.cpp bindings compiled for each platform. No network required, no data leakage.
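Native inference means shipping a separately compiled binary for each OS and CPU architecture. As a rough illustration for a JVM desktop target, the standard `System.mapLibraryName` call shows which file name the JVM expects for a given native library. The base name `llama` and the `platformTag` helper are assumptions for illustration only, not a description of how Llamatik packages its binaries.

```kotlin
// Maps a base library name to the platform-specific file name the JVM would load,
// e.g. "libllama.so" on Linux, "libllama.dylib" on macOS, "llama.dll" on Windows.
// The name "llama" is an illustrative assumption.
fun nativeLibraryFileName(baseName: String): String = System.mapLibraryName(baseName)

// Hypothetical helper: builds a tag such as "linux-amd64" that an app could use
// to pick the matching prebuilt binary from its resources.
fun platformTag(): String {
    val os = System.getProperty("os.name").lowercase()
    val osTag = when {
        os.startsWith("windows") -> "windows"
        os.startsWith("mac") -> "macos"
        os.startsWith("linux") -> "linux"
        else -> os.replace(' ', '-')
    }
    val arch = System.getProperty("os.arch").lowercase()
    return "$osTag-$arch"
}
```

On Android and iOS the platform toolchains handle this selection (per-ABI `.so` files and linked frameworks, respectively), so a helper like this only matters for desktop or server JVM builds.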

Kotlin Multiplatform API

A single, shared Kotlin API for Android, iOS, desktop, and server — with expect/actual handled for you.

Remote inference

Use HTTP-based inference when models are too large or when centralized execution is required — without changing your app logic.
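The decision between local and remote execution can be expressed as a small policy. The following is a minimal sketch that assumes the app can estimate a model's memory footprint and the device's free memory. `InferenceTarget`, `chooseTarget`, and the 20% headroom factor are hypothetical names and values, not part of Llamatik's API.

```kotlin
// Hypothetical types: illustrate a routing policy, not Llamatik's actual API.
enum class InferenceTarget { LOCAL, REMOTE }

/**
 * Picks where to run inference:
 * - REMOTE if centralized execution is explicitly required,
 * - REMOTE if the model plus a safety margin does not fit in free memory,
 * - LOCAL otherwise.
 */
fun chooseTarget(
    modelSizeBytes: Long,
    freeMemoryBytes: Long,
    requireCentralized: Boolean = false,
    headroom: Double = 1.2, // assumed margin for context cache and runtime overhead
): InferenceTarget {
    if (requireCentralized) return InferenceTarget.REMOTE
    val needed = (modelSizeBytes * headroom).toLong()
    return if (needed <= freeMemoryBytes) InferenceTarget.LOCAL else InferenceTarget.REMOTE
}
```

For example, a 4 GB model on a device with 8 GB free stays local, while a 7 GB model on the same device (8.4 GB with headroom) is routed to a server.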

Text generation & embeddings

Built-in support for common LLM use cases like text generation, chat-style prompts, and vector embeddings.
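Embeddings are commonly compared with cosine similarity to rank texts by semantic closeness, for example in on-device search. The sketch below assumes embeddings arrive as equal-length `FloatArray` vectors. `cosineSimilarity` and `rankBySimilarity` are hypothetical helpers, and in a real app the vectors would come from the embedding model rather than being written by hand.

```kotlin
import kotlin.math.sqrt

// Hypothetical helper: cosine similarity between two embedding vectors, in [-1, 1].
fun cosineSimilarity(a: FloatArray, b: FloatArray): Double {
    require(a.size == b.size) { "Vectors must have the same dimension" }
    var dot = 0.0
    var normA = 0.0
    var normB = 0.0
    for (i in a.indices) {
        val x = a[i].toDouble()
        val y = b[i].toDouble()
        dot += x * y
        normA += x * x
        normB += y * y
    }
    // Treat a zero vector as unrelated to everything instead of dividing by zero.
    if (normA == 0.0 || normB == 0.0) return 0.0
    return dot / (sqrt(normA) * sqrt(normB))
}

// Hypothetical helper: returns document keys ordered from most to least similar to the query.
fun rankBySimilarity(query: FloatArray, docs: Map<String, FloatArray>): List<String> =
    docs.entries
        .sortedByDescending { cosineSimilarity(query, it.value) }
        .map { it.key }
```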

GGUF model support

Works with modern GGUF-based models such as LLaMA, Mistral, and Phi.
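A GGUF file begins with a small fixed header: the ASCII magic `GGUF`, a `uint32` format version, then `uint64` counts of tensors and metadata key/value pairs, stored little-endian in the common case. The sketch below reads those fields so an app can reject a non-GGUF file before handing it to the runtime. `GgufHeader` and `readGgufHeader` are hypothetical names, and the sketch does not parse the metadata that follows the header.

```kotlin
import java.nio.ByteBuffer
import java.nio.ByteOrder

// Hypothetical type holding the fixed-size part of a GGUF header.
data class GgufHeader(val version: Int, val tensorCount: Long, val metadataKvCount: Long)

val GGUF_MAGIC = byteArrayOf(0x47, 0x47, 0x55, 0x46) // ASCII "GGUF"

/** Returns the parsed header, or null if the bytes do not start with a GGUF header. */
fun readGgufHeader(bytes: ByteArray): GgufHeader? {
    // magic (4) + version (4) + tensor count (8) + metadata KV count (8) = 24 bytes
    if (bytes.size < 24) return null
    if (!bytes.copyOfRange(0, 4).contentEquals(GGUF_MAGIC)) return null
    // Read the remaining 20 header bytes as little-endian integers.
    val buf = ByteBuffer.wrap(bytes, 4, 20).order(ByteOrder.LITTLE_ENDIAN)
    return GgufHeader(
        version = buf.int,
        tensorCount = buf.long,
        metadataKvCount = buf.long,
    )
}
```

In practice only the first 24 bytes of the model file need to be read for this check, so it stays cheap even for multi-gigabyte models.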

Lightweight runtime

No heavy frameworks, no cloud SDKs. Just Kotlin, native binaries, and full control over your stack.

Why run LLMs on-device?

Local-first beats cloud-first when it matters

Cloud-based LLMs are powerful — but they come with trade-offs. Latency, recurring costs, privacy concerns, and vendor lock-in can quickly become blockers for real-world applications.

Llamatik lets you run models directly on user devices using native inference powered by llama.cpp, reducing infrastructure costs while keeping sensitive data local. When you need scale or centralized inference, you can seamlessly switch to remote execution — using the same Kotlin API.
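Switching between local and remote execution without touching app logic comes down to having app code depend on an abstraction. The following is a minimal sketch built on a hypothetical `TextGenerator` interface. `LocalGenerator` and `RemoteGenerator` are stand-ins that return canned strings instead of calling llama.cpp or an HTTP endpoint, and none of these names are Llamatik's actual API.

```kotlin
// Hypothetical abstraction: app code depends only on this interface.
interface TextGenerator {
    fun generate(prompt: String): String
}

// Stand-in for on-device inference (a real one would call native llama.cpp bindings).
class LocalGenerator : TextGenerator {
    override fun generate(prompt: String) = "[local] reply to: $prompt"
}

// Stand-in for remote inference (a real one would send an HTTP request to `endpoint`).
class RemoteGenerator(private val endpoint: String) : TextGenerator {
    override fun generate(prompt: String) = "[remote $endpoint] reply to: $prompt"
}

// App logic: identical regardless of where inference runs.
class ChatFeature(private val generator: TextGenerator) {
    fun ask(question: String): String = generator.generate(question.trim())
}

// Local-first: use the on-device generator when available, otherwise fall back to remote.
fun pickGenerator(localAvailable: Boolean, endpoint: String): TextGenerator =
    if (localAvailable) LocalGenerator() else RemoteGenerator(endpoint)
```

Because `ChatFeature` only sees `TextGenerator`, moving a feature from device to server is a one-line change at construction time rather than a rewrite of the feature itself.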

Local-first by default. Remote when it makes sense. The result:

- 💸 Lower infrastructure costs

- 🔒 Privacy by default

- 🧠 Simpler architecture

Open source. Developer-first.

Llamatik is fully open source and built in the open.

No closed binaries. No hidden services. Just code you can inspect, run, and extend.

View on GitHub

Llamatik